Why Are Artificial Neural Networks Important?

Artificial neural networks are a complex technology that has been in research and development for decades. They use computing power to try to replicate the way the human brain processes information, so they can be a little dense to grasp at first.

In this two-part post, we will explain what neural networks are, what makes them "artificial," and how they are used in data science.

Let's begin with the neural network itself. Scientists have been trying to build a copycat version of the human brain since the 1940s, attempting to replicate the mathematics of the brain in a lab.

Put simply, a neural network is a group of algorithms designed to recognize patterns in data, much as the brain does.

To Understand Artificial Neural Networks, One Must Know How the Brain Works

The brain is a collection of interlinked neurons. Each neuron processes the signals it receives from the neurons connected to it and then either "fires" (sends out a signal of its own) or does not.

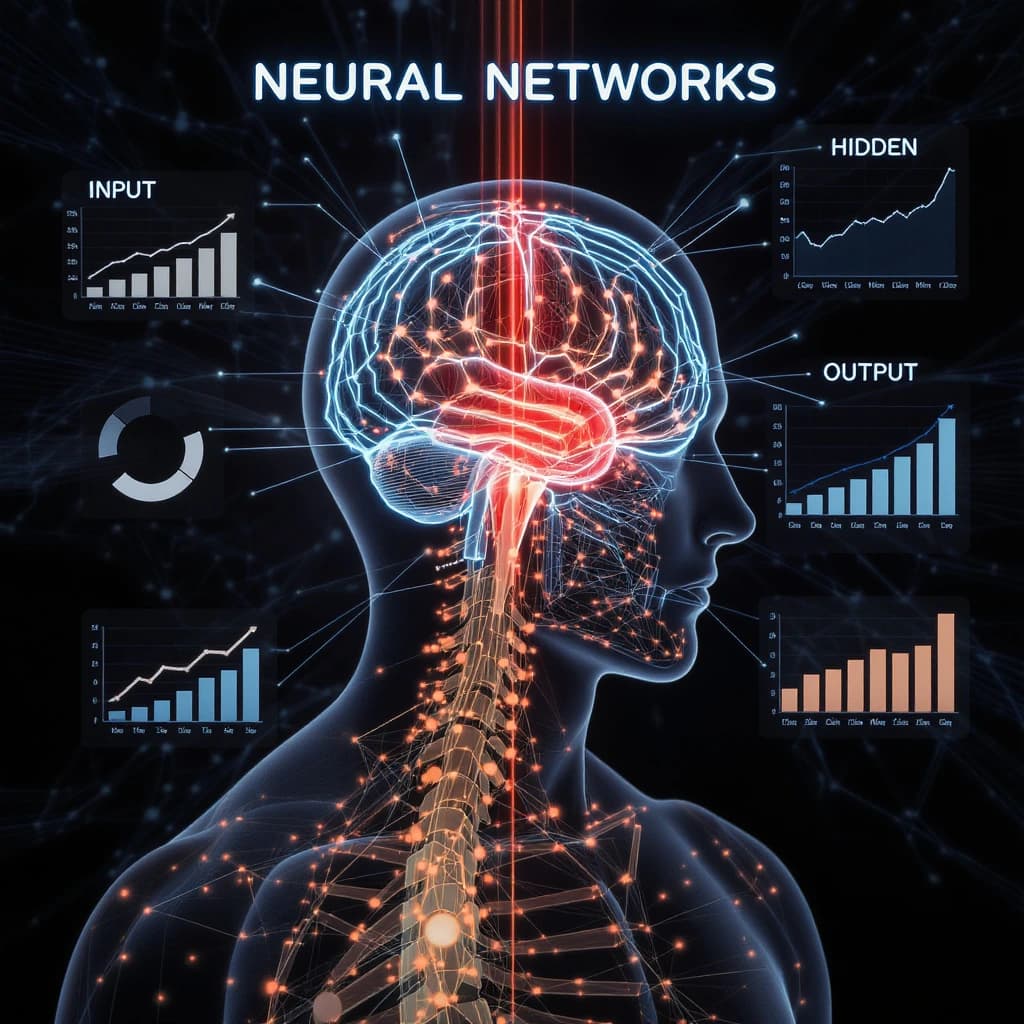

Like the human brain, "artificial" (man-made) neural networks consist of linked "artificial" neurons that try to perform similar calculations mathematically. A neural network is organized into layers: an input layer, one or more hidden layers, and an output layer. Each layer contains nodes loosely modeled on the brain's neurons.
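To make the input, hidden, and output layers concrete, here is a minimal Python sketch of a single forward pass through a tiny network. It assumes a sigmoid activation and uses made-up weights; the names `hidden_weights`, `output_weights`, `layer_forward`, and `network_forward` and all their values are hypothetical. In a real network, the weights would be learned from data rather than written by hand.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """One layer: each node takes a weighted sum of all inputs plus its bias,
    then applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Hypothetical weights for a network with 2 inputs, 3 hidden nodes, and 1 output node.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[0.7, -0.5, 0.2]]
output_biases = [0.05]

def network_forward(inputs):
    hidden = layer_forward(inputs, hidden_weights, hidden_biases)  # input layer -> hidden layer
    return layer_forward(hidden, output_weights, output_biases)    # hidden layer -> output layer

print(network_forward([1.0, 0.5]))
```

Each node in the hidden layer sees every input, and the output node sees every hidden node; that "fully connected" wiring is one common choice, not the only one.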


Each neuron can be thought of as responsible for detecting a single feature. It relies on the other neurons doing their jobs correctly so that the network as a whole eventually reaches an accurate decision.

With the advent of modern machine learning, neural networks are now used largely in deep learning, a subfield of machine learning, to solve problems like handwriting recognition and face detection by extracting "hidden patterns" within the data. This makes them especially useful for extracting patterns from images, videos, and speech.

A neural network is a computational technique for classifying or predicting a variable, much as the human brain tries to predict outcomes.

Neural networks rely on machine learning techniques that are widely used in predictive analytics, and they often form part of larger machine learning applications that also involve algorithms for reinforcement learning, classification, and regression.

For mainstream data scientists, neural networks are one of many ways to solve a business problem that involves large amounts of data.

One of the better explanations of artificial neural networks is given by Luke Dormehl in Digital Trends. He writes:

While neural networks (also called perceptrons) ….it is only in the last few decades where they have become a major part of artificial intelligence. This is due to the arrival of a technique called "backpropagation," which allows networks to adjust their hidden layers of neurons in situations where the outcome doesn't match what the creator is hoping for — like a network designed to recognize dogs, which misidentifies a cat, for example.

Another important advance has been the arrival of deep learning neural networks, in which different layers of a multilayer network extract different features until it can recognize what it is looking for.

So a major reason artificial neural networks may soon go mainstream is deep learning: stacking many layers lets each layer extract progressively more specific features until the network can recognize what it is looking for.
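Backpropagation, which the quote credits for the rise of neural networks, is the chain rule from calculus applied layer by layer: the network compares its output with the desired answer and works backward to find how much each weight contributed to the error, then nudges each weight to reduce it. The Python sketch below shows this for a tiny network with two inputs, two sigmoid hidden nodes, and one sigmoid output, trained with squared-error loss on the logical OR of two inputs. The network size, loss, learning rate, training data, and function names (`init_params`, `forward_backward`, `train_epoch`, `dataset_loss`) are illustrative assumptions, not code from Digital Trends or any library.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_in=2, n_hidden=2, seed=0):
    """Small random weights for a hypothetical 2-input, 2-hidden, 1-output network."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }

def forward_backward(p, x, y):
    """Return the loss 0.5 * (output - y)**2 for one example and its gradient for every parameter."""
    # Forward pass: input -> hidden -> output, all with sigmoid activations.
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    out = sigmoid(sum(w * hi for w, hi in zip(p["W2"], h)) + p["b2"])
    loss = 0.5 * (out - y) ** 2
    # Backward pass: the chain rule carries the output error back through the network.
    d_out = (out - y) * out * (1 - out)  # dLoss / d(output pre-activation)
    d_h = [d_out * w * hi * (1 - hi) for w, hi in zip(p["W2"], h)]  # dLoss / d(hidden pre-activations)
    grads = {
        "W2": [d_out * hi for hi in h],
        "b2": d_out,
        "W1": [[dh * xi for xi in x] for dh in d_h],
        "b1": d_h,
    }
    return loss, grads

def train_epoch(p, data, lr=0.5):
    """One pass of per-example gradient descent: move every weight against its gradient."""
    for x, y in data:
        _, g = forward_backward(p, x, y)
        p["W1"] = [[w - lr * gw for w, gw in zip(row, grow)] for row, grow in zip(p["W1"], g["W1"])]
        p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]
        p["W2"] = [w - lr * gw for w, gw in zip(p["W2"], g["W2"])]
        p["b2"] -= lr * g["b2"]

def dataset_loss(p, data):
    """Total loss over all examples, without updating anything."""
    return sum(forward_backward(p, x, y)[0] for x, y in data)

if __name__ == "__main__":
    # Hypothetical training data: the logical OR of two inputs.
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
    params = init_params()
    print("loss before training:", dataset_loss(params, data))
    for _ in range(2000):
        train_epoch(params, data)
    print("loss after training:", dataset_loss(params, data))
```

A useful sanity check for any backpropagation code is to compare each computed gradient against a finite-difference estimate, that is, nudge one weight slightly up and down and measure how the loss changes.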

How Does an Artificial Neural Network Make Predictions?

Artificial neural networks interpret sensory data through machine learning techniques. They cluster raw input or assign labels to it to produce their output.

Here's a deeper explanation. At the heart of a neural network is a basic building block called a "perceptron," not to be confused with a neuron. A perceptron computes a weighted sum of its inputs plus a bias and outputs 1 if the result is positive and 0 otherwise. Combined in layers, perceptrons break complex inputs down into smaller pieces of information.
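Here is a minimal Python sketch of a single perceptron, assuming the classic step-threshold output and the classic perceptron learning rule (after each wrong answer, shift the weights toward or away from that input). It is trained on the logical AND of two inputs; the data, learning rate, epoch count, and the names `predict` and `train_perceptron` are illustrative choices, not part of the original post.

```python
def predict(weights, bias, inputs):
    """Perceptron output: 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(samples, n_inputs, lr=1.0, epochs=100):
    """Perceptron learning rule: on each mistake, add lr * error * input to the weights."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

# Hypothetical training data: the logical AND of two binary inputs.
AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND_SAMPLES, n_inputs=2)
print([predict(w, b, x) for x, _ in AND_SAMPLES])
```

Because AND is linearly separable (a single straight line can split the 1s from the 0s), the perceptron learning rule is guaranteed to converge on it. A single perceptron cannot learn a pattern that no straight line separates, such as XOR, which is one reason networks stack multiple layers.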

For example, a network could take an image of a person's face and break it down, just as a human would, into facial features like the eyes and nose. Each of these features could then be handled by a perceptron in a single layer, and the next layer can break them into even smaller features.

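These could include a left and a right eye, an upper and a lower lip, and so on. By drilling down this way, the machine soon has the building blocks of a face. An artificial neuron is a generalization of a perceptron: instead of a hard 0-or-1 threshold, it can use a smooth activation function such as the sigmoid.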
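The following Python sketch illustrates that generalization under simple assumptions: one `neuron` function with a pluggable activation. Passing a step function gives back a perceptron's 0-or-1 output; passing a sigmoid gives a graded output between 0 and 1. The function names, weights, and bias are hypothetical values chosen for illustration.

```python
import math

def neuron(inputs, weights, bias, activation):
    """A generic artificial neuron: weighted sum plus bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

def step(z):
    """Hard threshold: with this activation the neuron behaves as a perceptron."""
    return 1 if z > 0 else 0

def sigmoid(z):
    """Smooth threshold: a graded output between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical weights and bias for illustration.
weights, bias = [0.6, 0.4], -0.5
for inputs in ([0, 0], [1, 0], [1, 1]):
    print(inputs, neuron(inputs, weights, bias, step), round(neuron(inputs, weights, bias, sigmoid), 3))
```

A smooth activation such as the sigmoid is differentiable, which is what gradient-based training methods like backpropagation require; the step function's derivative is zero almost everywhere, so it gives those methods nothing to follow.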

You can think of a neural network as a clustering and classification layer on top of your data. How? Given unlabeled inputs, it can group the data according to similarities. Given a labeled dataset to learn from, it can classify new data into the known groups.
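The post does not say which technique a network uses to group unlabeled data. One classic neural approach is competitive (winner-take-all) learning, sketched below in Python as an assumption rather than the author's method: each output neuron holds a weight vector (a "prototype"), and for every input only the closest neuron moves its weights toward that input. The points, learning rate, starting prototypes, and the names `nearest` and `competitive_learning` are hypothetical.

```python
import math

def nearest(prototypes, point):
    """Index of the prototype (a neuron's weight vector) closest to the point."""
    return min(range(len(prototypes)), key=lambda i: math.dist(prototypes[i], point))

def competitive_learning(points, prototypes, lr=0.1, epochs=20):
    """Winner-take-all learning: for each point, only the closest neuron moves toward it."""
    prototypes = [list(p) for p in prototypes]  # copy so the caller's list is untouched
    for _ in range(epochs):
        for point in points:
            win = nearest(prototypes, point)
            prototypes[win] = [w + lr * (x - w) for w, x in zip(prototypes[win], point)]
    return prototypes

# Hypothetical unlabeled data: two loose groups of 2-D points.
points = [(0.1, 0.2), (0.3, 0.0), (-0.2, 0.1), (5.0, 5.2), (4.8, 5.1), (5.3, 4.9)]
protos = competitive_learning(points, prototypes=[points[0], points[-1]])
labels = [nearest(protos, p) for p in points]
print(labels)
```

After training, each prototype sits inside one of the groups, so the index of the nearest prototype acts as a cluster label even though no labels were ever provided.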

Artificial neural networks are trained to find correlations. According to pathmind.com, deep learning maps inputs to outputs.

It is known as a "universal approximator" because it can learn to approximate an unknown function f(x) = y between any input x and any output y, assuming they are related at all (by correlation or causation, for example). In the process of learning, a neural network finds the right f, that is, the correct way of transforming x into y, whether that be f(x) = 3x + 12 or f(x) = 9x - 0.1.
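As a minimal sketch of "finding the right f," the Python code below uses gradient descent on mean squared error to fit a single linear neuron to samples of f(x) = 3x + 12, the first example function in the text. This is a deliberate simplification: a single linear neuron can only represent straight lines, while the universal-approximation idea concerns networks with nonlinear hidden layers approximating far more complicated functions. The sample points, learning rate, epoch count, and the names `fit_linear_neuron` and `target` are assumptions for illustration.

```python
def fit_linear_neuron(xs, ys, lr=0.05, epochs=1000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n  # d(MSE)/dw
        grad_b = 2 * sum(errors) / n                             # d(MSE)/db
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def target(x):
    """The 'unknown' function from the text: f(x) = 3x + 12."""
    return 3 * x + 12

xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [target(x) for x in xs]
w, b = fit_linear_neuron(xs, ys)
print(f"learned f(x) = {w:.3f}x + {b:.3f}")
```

The same function recovers the text's second example if you pass samples of 9x - 0.1 instead; the training procedure does not change, only the data does.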